BlockScrapers

No DNS changes

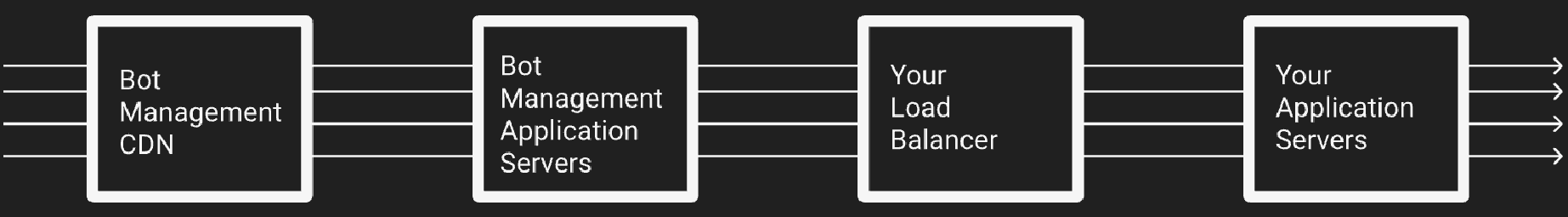

No DNS changes or additional infrastructure needed. Integrates directly with your existing AWS CloudFront. Set up in 5 minutes or less.

Eliminate the cruft

We only block scrapers, without the bells and whistles other products include. That lets us charge orders of magnitude less & still block scrapers within minutes.

Admin Console

Set up custom rules for allowing/blocking traffic. One-click support for good bots & common third-party integrations (Googlebot, Bingbot, Stripe, AWS SNS, etc.).

Machine Learning

Bots scrape massive amounts of images, video, and text to collect training data.

Price scraping

Bots scrape your prices, inventory list, and product views.

Scoreboards

Bots scrape your live scoreboards, leaderboards, and tournament schedules.

DDoS

Bots can crash your site or slow it to a crawl.

Forums & Blogs

Bots scrape your users' posts and user details, and submit spam in bulk.

Credential Stuffing

Bots use your site to validate millions of stolen credit cards and passwords.

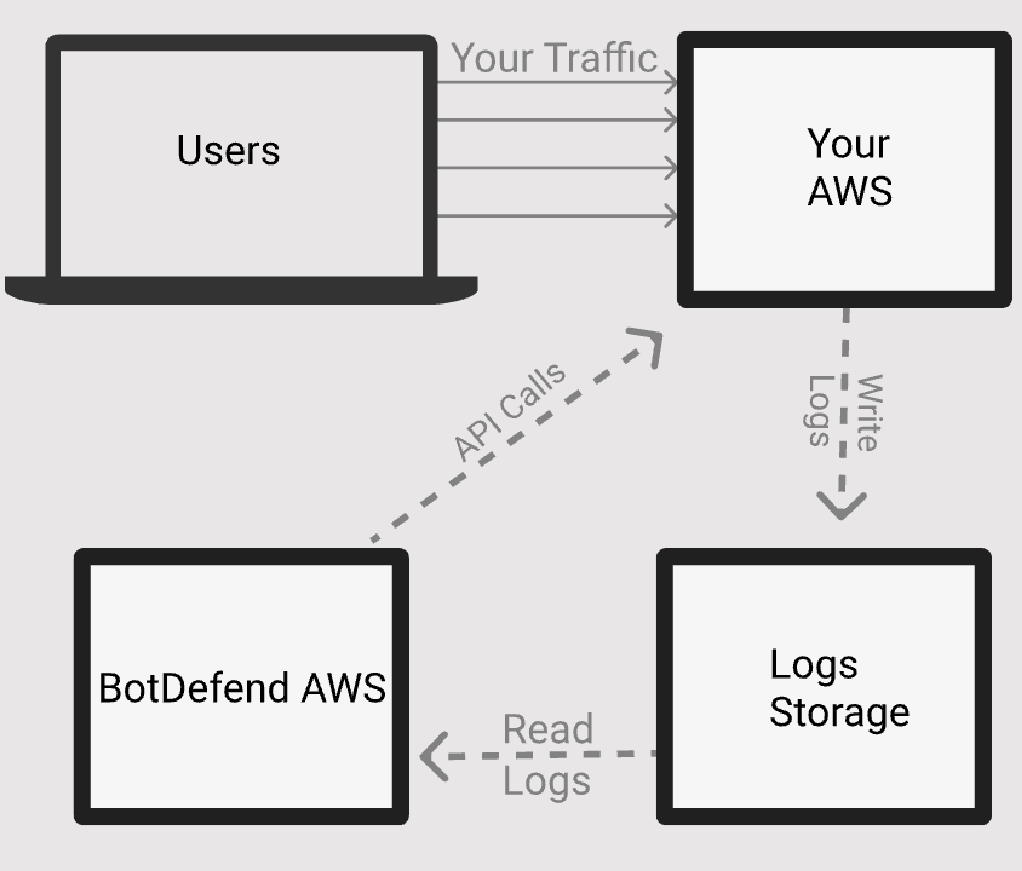

Other solutions (Cloudflare, Akamai, Imperva, Reblaze, etc.) proxy every request in real time, which is expensive & adds latency. To do this, they require heavy infrastructure or DNS changes.

BlockScrapers only processes your logs, which significantly lowers the price while providing a similar level of defense. BlockScrapers requires no DNS changes or proxying infrastructure.
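Purely to illustrate log-based (rather than proxy-based) detection, here is a minimal Python sketch. It assumes CloudFront standard access logs, which are tab-separated rows preceded by a `#Fields:` header naming columns such as `c-ip`, and applies a hypothetical rule, `flag_heavy_clients`, that flags client IPs exceeding a fixed request count. It is not BlockScrapers' actual detection logic, which is not described here.

```python
from collections import Counter


def flag_heavy_clients(log_lines, threshold):
    """Hypothetical rule: return client IPs with more than `threshold` requests.

    Assumes CloudFront standard log lines: a '#Fields:' header naming the
    columns, then one tab-separated row per request.
    """
    fields = []
    counts = Counter()
    for line in log_lines:
        line = line.rstrip("\r\n")
        if line.startswith("#Fields:"):
            # Column names, e.g. "date time x-edge-location sc-bytes c-ip ..."
            fields = line[len("#Fields:"):].split()
            continue
        if not line or line.startswith("#"):
            continue  # skip blank lines and other headers such as '#Version'
        row = dict(zip(fields, line.split("\t")))
        ip = row.get("c-ip")
        if ip:
            counts[ip] += 1
    return sorted(ip for ip, n in counts.items() if n > threshold)


if __name__ == "__main__":
    demo = [
        "#Version: 1.0",
        "#Fields: date time x-edge-location sc-bytes c-ip cs-method cs-uri-stem",
        "2024-01-01\t00:00:01\tIAD89-C1\t512\t203.0.113.7\tGET\t/prices",
        "2024-01-01\t00:00:02\tIAD89-C1\t512\t203.0.113.7\tGET\t/prices",
        "2024-01-01\t00:00:03\tIAD89-C1\t512\t203.0.113.7\tGET\t/prices",
        "2024-01-01\t00:00:04\tIAD89-C1\t512\t198.51.100.2\tGET\t/",
    ]
    print(flag_heavy_clients(demo, threshold=2))  # → ['203.0.113.7']
```

Because the logs are read after the fact, a check like this adds no latency to live requests; the trade-off is that detection lags behind traffic by however long log delivery takes.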